Introduction#

After the success of my previous blog entry about on-call, I have decided to cover a technical topic that was close to my heart as a C++ software engineer. Dear readers that are not familiar with C++ templates, compilation and so on - you are still welcome to stay and get some ideas on how to leverage LLM (Github Copilot) to address mundane tasks in software engineering.

tl;dr#

- Since C++ template were introduced, many authors have taken an herculean effort upon themselves to implement entire projects as a template.

- While C++ templates deliver many benefits, their drawbacks overshadow these benefits when exclusively and religiously deployed.

- Boost.Geometry, is an example for such a template mess. The unit tests take ages to build, and a tiny fraction of that time to run.

- Clang Modules - can improve the build time by ~30% out of the box. It does require some build system (CMake) massaging.

- We can do better. Roll up the sleeves and explicitly instantiate templates, AI tags along to help.

- Contact me to save build time and resources in your project, measured in real (cloud) compute

money.

C++ Templates and the header-only culture#

C++ templates were introduced in the first international standard for the language, c++98. The original purpose was to create generic functions and classes, that would work with any data type. Fast forward to c++11, templates can have a variable amount of types to instantiate. Since then, numerous projects decided to go as far as implement their entire library as a header123.

Benefits#

- Readability, centralized definition.

- Improved runtime when combined with function inlining. We pre-generate all the input and output type combinations of function implementations, during compilation time - that saves runtime on type conditioned function calls.

- Error detection during pre-processing (compilation), for

constexprtypes.

Some of these advantages become downsides, when templates are blindly stacked on top of other templates and the project’s

Drawbacks#

- Layers upon layers of template abstraction make it hard to predict the final code.

- Larger inlined functions create larger binaries.

- Compilation time explodes, when the amount of translation units is high and none of the definitions are external (delegated to the linker). There is also redundancy when certain types of classes or functions are repeatedly instantiated in different translation units.

As some of you probably already know, or devised from the drawbacks and the article title - the issue explodes when hundreds of unit test binaries are including deeply entrenched header-only libraries.

Boost.Geometry#

This leads me to Boost.Geometry unit tests. They include the header-only library into each test binary. Moreover each test is built with dozen of different compilers and different architectures for each compiler:

$ rg "(toolset|cxxstd|addrmd): [\w\-,\.]+" -o libs/geometry/.github/workflows/minimal.yml

...

74:toolset: clang-10

75:cxxstd: 14,17,2a

78:toolset: clang-11

79:cxxstd: 14,17,2a

82:toolset: clang-12

83:cxxstd: 14,17,2a

86:toolset: clang-13

...

$ rg "address" libs/geometry/.github/workflows/minimal.yml

274: $BOOST_ROOT/b2 toolset=${{ matrix.b2_toolset }} cxxstd=${{ matrix.b2_cxxstd }} variant=debug,release address-model=32,64 libs/geometry/test//minimal

...

On a simple machine:

$ lscpu|rg "Core|max MHz"

Core(s) per socket: 4

CPU max MHz: 3600.0000

It takes ages to build the entire test suite (boost-1.88.0), around half an hour. I decided to look deeper to understand what’s the culprit.

Crosses#

I took a simple unit test, crosses_gc.cpp:

void test_gc()

{

test_geometry<gc_t, gc_t>("GEOMETRYCOLLECTION(LINESTRING(0 0, 10 10))",

"GEOMETRYCOLLECTION(POLYGON((0 0,0 5,5 5,5 0,0 0)))",

true);

test_geometry<gc_t, gc_t>("GEOMETRYCOLLECTION(LINESTRING(0 0, 10 0))",

"GEOMETRYCOLLECTION(POLYGON((0 0,0 5,5 5,5 0,0 0)))",

false);

...

and checked what the clang pre-processor would generate after including all header and instantiating all templates. I was shocked that the source file would explode to almost half a million lines of code, no wonder it takes a while to compile:

$ clang++ $(echo $DEFINES_INCLUDES) -std=c++14 -E test/algorithms/crosses/crosses_gc.cpp > output.txt

$ cat test/algorithms/crosses/crosses_gc.cpp|wc -l

61

$ cat output.txt|wc -l

381926

This test suite, consisting of 3 small tests, takes around 30 seconds to build (after building the boost dependency libraries). The tests themselves take just double digit milliseconds to run:

$ time ninja test/algorithms/crosses/all

[6/6] Linking CXX executable test/algori...ses/boost_geometry_algorithms_crosses_gc

ninja test/algorithms/crosses/all 68.61s user 3.37s system 250% cpu 28.699 total

$ ninja test/algorithms/crosses/test

[0/1] Running tests...

Test project /Development/boost/libs/geometry/build/test/algorithms/crosses

Start 1: boost_geometry_algorithms_crosses

1/3 Test #1: boost_geometry_algorithms_crosses ....... Passed 0.01 sec

Start 2: boost_geometry_algorithms_crosses_gc

2/3 Test #2: boost_geometry_algorithms_crosses_gc .... Passed 0.01 sec

Start 3: boost_geometry_algorithms_crosses_sph

3/3 Test #3: boost_geometry_algorithms_crosses_sph ... Passed 0.03 sec

100% tests passed, 0 tests failed out of 3

Total Test time (real) = 0.05 sec

Can we do something about it with minimum modifications?

Clang module -fmodules#

I read around and found out that using Clang modules, it’s possible to reduce compilation time by up to 35% without modifying the code. I thought giving precompiled headers a try, but I did not want to mess with the structure of the pre-existing build setup.

Setup#

I decided to take the CMake build of the project and add some flags to the unit test build macro:

function(boost_geometry_add_unit_test prefix item)

set(unit_test_name "boost_geometry_${prefix}_${item}")

add_compile_options(

-fmodules -fimplicit-module-maps

-fmodule-map-file=${CMAKE_SOURCE_DIR}/include/boost/geometry/algorithms/module.modulemap

-fmodules-cache-path=${CMAKE_BINARY_DIR}/prebuilt

-Xclang -fdisable-module-hash

)

I use Clang Modules, not the standard C++ modules. Here’s what the flags mean:

-fmoduleenable Clang modules-fmodule-map-filepoints to themodule.modulemapfile the resides outside of the directory of tests, the actual header-only algorithmcrosses.-fmodules-cache-pathis used to control the cache location for the modules (.pcmfiles). Otherwise the modules are built in a system defined location.-fdisable-module-hashis used to be able to use the-fimplicit-modul-mapsflags. It allows the compiler to find the modules by plain name.

I also added the module.maps in the following directories:

$ git status|rg "module"

include/boost/geometry/algorithms/module.modulemap

test/algorithms/crosses/module.modulemap

test/module.modulemap

I just added the headers into the files, nothing fancy:

module BoostGeometryTestAlgorithmsCrosses {

header "test_crosses.hpp"

export *

}

Benchmarks#

I experienced a ~25% improvement in compilation time, with this simple setup:

$ hyperfine --runs 5 --prepare "rm-rf prebuilt;rm -rf test" "ninja test/algorithms/crosses/all" --export-markdown ../crosses_with_modules.txt

Benchmark 1: ninja test/algorithms/crosses/all

...

$ hyperfine --runs 5 --prepare "rm-rf prebuilt;rm -rf test" "ninja test/algorithms/crosses/all" --export-markdown ../crosses_without_modules.txt

With modules#

| Command | Mean [s] | Min [s] | Max [s] | Relative |

|---|---|---|---|---|

ninja test/algorithms/crosses/all | 22.155 ± 0.474 | 21.773 | 22.966 | 1.00 |

Without modules#

| Command | Mean [s] | Min [s] | Max [s] | Relative |

|---|---|---|---|---|

ninja test/algorithms/crosses/all | 29.168 ± 0.841 | 27.984 | 30.341 | 1.00 |

This is a great reduction considering that the full test suite runs around 30 minutes in this setup, for dozens of different compilers on two different architectures. A bit of a napkin math for this setup would show the following reduction per test run:

$$

30_{minutes} * 20_{tests} * 25\% = 150_{minutes}

$$

Unfortunately, building the entire test-suite this way breaks the compilation, as some modules are built with definitions and compilation options, that differ between different targets. If you want to stop here and collect the ~30% gain in exchange for building different variants of the modules for the entire test suite - be my guest. This applies to UNITY_BUILDs as well, as the project breaks similarly.

I want to explore the classic method of improving the compilation time with better gains and maybe automate some of the work using LLMs.

Explicit template instantiation#

Why? ftrace-time#

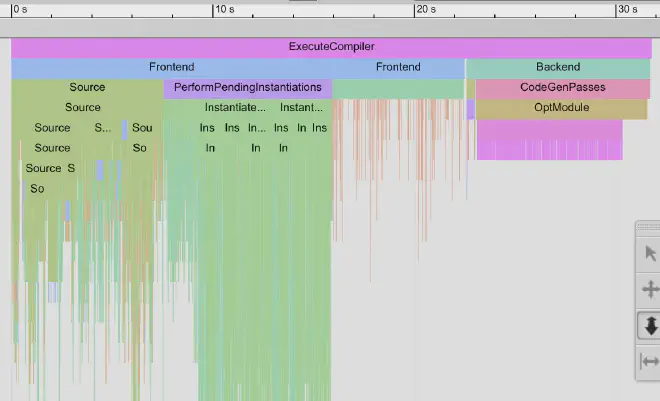

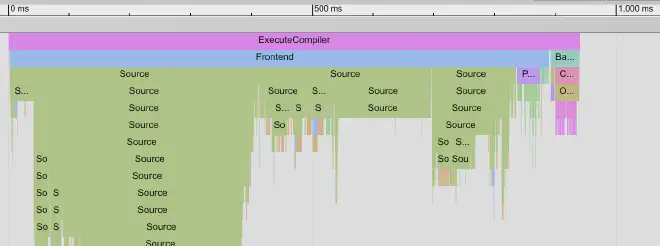

If we compile the tests with -ftrace-time, we can see that ~25% is used to traverse the included header files, another ~25% is used to instantiate the templates and then the rest of the time is used to generate the code:

We learned earlier how we can slash the 25% on traversing the header files. But do we really need to re-compile the templates over and over again?

Instead of letting the compiler traverse the depths of the template definitions, we can delegate templated function definitions to the linker, using the extern modifier. I have taken the previously mentioned test suite and added extern declarations:

template <typename Geometry1, typename Geometry2>

extern void test_geometry(std::string const &wkt1, std::string const &wkt2, bool expected);

Now we need to instantiate this function in a translation unit.

Copilot#

It’s a tedious job to manually go over all instantiations of the tests and add the explicit instantiation to a translation unit, even with multi-cursor.

Let’s ask Copilot to do it!

Plan#

I chose to explicitly instantiate the test template test_crosses as a demonstration. It would make more sense to instantiate and build the entire core Boost.Geometry library as it has components that are reused by the entire test suite. If we cache it correctly between builds we will not have to fully rebuild it each time.

Prompts#

Here can you find the complete prompts and diff.

I want to explicitly instantiate all calls to test_geometry in a new source file, build it and link it to the crosses test.

make test_geometry extern in crosses.cpp create a source file instantiate_test_crosses.cpp and inside it create: aliases for all types used in crosses.cpp a macro to instantiate test_geometry for both in and double

The result was quite good, it created the new file, and the macros (I will share only the important parts):

boost/libs/geometry/test/algorithms/crosses/instantiate_test_crosses.cpp

...

#define INSTANTIATE_TEST_GEOMETRY(T) \

template void test_geometry<multi_point_type<T>, linestring_type<T>>(std::string const&, std::string const&, bool); \

template void test_geometry<multi_point_type<T>, multi_linestring_type<T>>(std::string const&, std::string const&, bool); \

...

INSTANTIATE_TEST_GEOMETRY(int)

INSTANTIATE_TEST_GEOMETRY(double)

I asked it to correct crosses.cpp, and just use an extern template:

in crosses.cpp just make it template<typename A, typename B> extern … instead of mentioning all of them. Also remove the include of test_crosses.hpp and add the necessary includes for the types that are used to call test_geometry

It did:

...

#include <boost/geometry.hpp>

#include <boost/geometry/geometries/point_xy.hpp>

#include <boost/geometry/geometries/multi_point.hpp>

#include <boost/geometry/geometries/linestring.hpp>

#include <boost/geometry/geometries/multi_linestring.hpp>

#include <boost/geometry/geometries/polygon.hpp>

#include <boost/geometry/geometries/multi_polygon.hpp>

template <typename A, typename B>

extern void test_geometry(std::string const&, std::string const&, bool);

...

Now I asked it to take care of the CMake project:

in the CMakelists.txt build instantiate_test_crosses.cpp as a static library, link it into the tests in the foreach loop and add a dependency

It missed some parts, so I prompted it again to fill in the missing parts:

add PRIVATE to the linking, and also add all the missing includes path from CMakeLists.txt in test.

yes but now add “${PROJECT_SOURCE_DIR}/test” “${PROJECT_SOURCE_DIR}/index/test” as include dirs

Eventually, I stepped in and saved it.

Surprise, surprise it actually builds!

$ time ninja test/algorithms/crosses/boost_geometry_algorithms_crosses

[1/4] Building CXX object test/algorithm...try_algorithms_crosses.dir/crosses.cpp.[4/4] Linking CXX executable test/algor...osses/boost_geometry_algorithms_crosses

ninja test/algorithms/crosses/boost_geometry_algorithms_crosses 31.76s user 1.80s system 120% cpu 27.950 total

Results#

It takes the test crosses.cpp around a second and a half to fully build, less than a second to compile ~30x improvement over the original build, granted the boost dependencies were already built:

$ hyperfine --runs 5 --prepare "rm -f test/algorithms/crosses/boost_geometry_algorithms_crosses test/algorithms/crosses/CMakeFiles/boost_geometry_algorithms_crosses.dir/crosses.cpp.o" "ninja test/algorithms/crosses/boost_geometry_algorithms_crosses"

Benchmark 1: ninja test/algorithms/crosses/boost_geometry_algorithms_crosses

Time (mean ± σ): 1.427 s ± 0.015 s [User: 1.100 s, System: 0.322 s]

Range (min … max): 1.415 s … 1.453 s 5 runs

Needless to say, building all 3 tests together now takes longer, because all of them wait for the test_crosses_instantiation library to finish building first, in comparison to building all 3 tests in parallel. This use of a LLM was a demonstration for instantiating parts that are commonly used in the entire test suite.

Digest#

We started with a C++ project heavy on templates. Improved the build time using -fmodules. Then we improved further using LLMs to do some lengthy explicit template instantiations for us. This resulted in a ~30% improvement at first, followed by a 30x(!) improvement.

Alexey to the rescue#

Does your C++ project take ages to build? Your developers are climbing the walls out of boredom while waiting for a tiny change in the test to compile?

Contact me to save build time and resources in your project, measured in real (cloud) compute money.